Class representing an HDFDataset, which reads and writes data and attributes.

#include <hdfdataset.hh>

Public Types

| using | Base = HDFObject< HDFCategory::dataset > |

| Base class alias. | |

Public Member Functions

| auto | get_type () |

| Get the type object. | |

| HDFDataspace | get_memspace () |

| Get the memory dataspace. | |

| HDFDataspace | get_filespace () |

| Get the file dataspace. | |

| auto | get_attribute_buffer () |

| Returns the attribute buffer of this dataset. | |

| HDFIdentifier | get_parent_id () |

| Get the identifier of the parent object. | |

| std::size_t | get_rank () |

| Get the rank of the dataset, i.e. its dimensionality. | |

| auto | get_current_extent () |

| Get the current extent of the dataset. | |

| auto | get_offset () |

| Get the offset object. | |

| auto | get_capacity () |

| Get the maximum extent (capacity) of the dataset. | |

| auto | get_chunksizes () |

| Get the chunksizes vector. | |

| auto | get_compresslevel () |

| Get the compression level. | |

| void | set_capacity (std::vector< hsize_t > capacity) |

| Set the capacity, and set the rank of the dataset to capacity.size(). | |

| void | set_chunksize (std::vector< hsize_t > chunksizes) |

| Set the chunk sizes. | |

| template<typename Attrdata > | |

| void | add_attribute (std::string attribute_path, Attrdata data) |

| Add an attribute to the dataset. | |

| void | close () |

| Close the dataset. | |

| template<HDFCategory cat> | |

| void | open (const HDFObject< cat > &parent_object, std::string path, std::vector< hsize_t > capacity={}, std::vector< hsize_t > chunksizes={}, hsize_t compress_level=0) |

| Open the dataset in parent_object with relative path 'path'. | |

| void | open (const HDFIdentifier &parent_identifier, std::string path, std::vector< hsize_t > capacity={}, std::vector< hsize_t > chunksizes={}, hsize_t compress_level=0) |

| Open the dataset in the object identified by parent_identifier, with relative path 'path'. | |

| void | swap (HDFDataset &other) |

| Swap the state of the objects. | |

| template<typename T > | |

| void | write (T &&data, [[maybe_unused]] std::vector< hsize_t > shape={}) |

| Writes data of arbitrary type. | |

| template<typename Iter , typename Adaptor > | |

| void | write (Iter begin, Iter end, Adaptor &&adaptor) |

| Write the iterator range [begin, end), following the usual STL convention. | |

| template<typename T , std::size_t d> | |

| void | write_nd (const boost::multi_array< T, d > &data, std::vector< hsize_t > offset={}) |

| Write a boost::multi_array of arbitrary type and dimension to the dataset. The dataset needs to be of dimension N >= d, because the number of dimensions of a dataset cannot be changed after it has been created. In all other regards this behaves like the normal 'write' function that accepts a value. | |

| template<typename Type > | |

| auto | read ([[maybe_unused]] std::vector< hsize_t > start={}, [[maybe_unused]] std::vector< hsize_t > end={}, [[maybe_unused]] std::vector< hsize_t > stride={}) |

| Read (a subset of) a dataset into a buffer of type 'Type'. Type gives the type of the buffer to read into. Currently only 1d reads are supported, so an N-d dataset of doubles has to be read into a 1d buffer of doubles whose size is the product of the dataset's dimensions. | |

| HDFDataset ()=default | |

| Default constructor. | |

| HDFDataset (const HDFDataset &other)=default | |

| Copy constructor. | |

| HDFDataset (HDFDataset &&other)=default | |

| Move constructor. | |

| HDFDataset & | operator= (const HDFDataset &other)=default |

| Copy assignment operator. | |

| HDFDataset & | operator= (HDFDataset &&other)=default |

| Move assignment operator. | |

| template<HDFCategory cat> | |

| HDFDataset (HDFObject< cat > &parent_object, std::string path, std::vector< hsize_t > capacity={}, std::vector< hsize_t > chunksizes={}, hsize_t compress_level=0) | |

| Construct a new HDFDataset object. | |

| virtual | ~HDFDataset () |

| Destructor. | |

Public Member Functions inherited from Utopia::DataIO::HDFObject< HDFCategory::dataset >

| void | swap (HDFObject &other) |

| Swap the state of the caller with the state of the argument. | |

| std::string | get_path () const |

| Get the name or path of the object. | |

| auto | get_id_object () const |

| Get the id object. | |

| auto | get_logger () const |

| Get the logger object. | |

| hid_t | get_C_id () const |

| Get the C id of the object. | |

| auto | get_refcount () |

| Get the reference count of the object. | |

| virtual bool | is_valid () const |

| Check if the object is still valid. | |

| void | close () |

| Close the object, correctly releasing it and managing the reference counter. | |

| void | bind_to (hid_t id, std::function< herr_t(hid_t) > closing_func, std::string path={}) |

| Open the object and bind it to an HDF5 object identified by 'id' with name 'path'. The HDF5 object should be created beforehand. | |

| HDFObject () | |

| Default constructor. | |

| HDFObject (HDFObject &&other) | |

| Construct HDFObject by moving. | |

| HDFObject (const HDFObject &other)=default | |

| Construct HDFObject by copying another object. | |

| HDFObject (hid_t id, std::function< herr_t(hid_t) > closing_func, std::string path={}) | |

| Construct HDFObject from the given arguments. | |

| HDFObject & | operator= (const HDFObject &other) |

| Copy assignment operator. | |

| HDFObject & | operator= (HDFObject &&other) |

| Move assignment operator. | |

| virtual | ~HDFObject () |

| Destroy the HDFObject object. Has to be implemented in subclass! | |

Private Member Functions

| template<typename Datatype > | |

| void | __create_dataset__ (std::size_t typesize) |

| Helper function for creating a non-compressed dataset. | |

| template<typename T > | |

| herr_t | __write_container__ (T &&data) |

| Writes containers to the dataset. | |

| template<typename T > | |

| herr_t | __write_stringtype__ (T data) |

| Writes string types. | |

| template<typename T > | |

| herr_t | __write_pointertype__ (T data) |

| Writes pointer types; the shape is interpreted like NumPy's shape argument. | |

| template<typename T > | |

| herr_t | __write_scalartype__ (T data) |

| Writes simple scalars, which are not pointers, containers or strings. | |

| template<typename Type > | |

| herr_t | __read_container__ (Type &buffer) |

| Read a container. | |

| template<typename Type > | |

| auto | __read_stringtype__ (Type &buffer) |

| Read data which contains a single string. | |

| template<typename Type > | |

| auto | __read_pointertype__ (Type buffer) |

| Read pointer types. | |

| template<typename Type > | |

| auto | __read_scalartype__ (Type &buffer) |

| Read scalar types (trivial case). | |

| void | __write_attribute_buffer__ () |

| Write out the attribute buffer. | |

Private Attributes | |

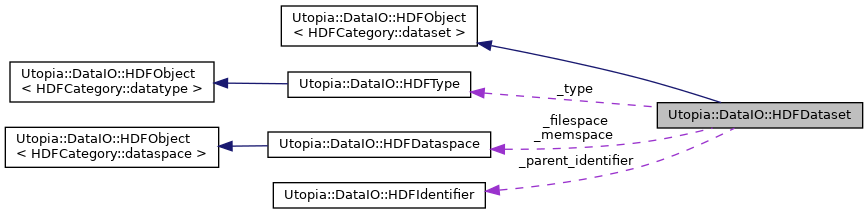

| HDFIdentifier | _parent_identifier |

| Identifier of the parent object. | |

| hsize_t | _rank |

| Number of dimensions of the dataset. | |

| std::vector< hsize_t > | _current_extent |

| The currently occupied size of the dataset, in number of elements per dimension. | |

| std::vector< hsize_t > | _capacity |

| The maximum number of elements which can be stored in the dataset, per dimension. | |

| std::vector< hsize_t > | _chunksizes |

| The chunk sizes per dimension, if the dataset is extendible or compressed. | |

| std::vector< hsize_t > | _offset |

| Offset of the data. | |

| std::vector< hsize_t > | _new_extent |

| Buffer for extent updates. | |

| std::size_t | _compress_level |

| The level of compression, 0 to 10. | |

| std::vector< std::pair< std::string, typename HDFType::Variant > > | _attribute_buffer |

| A buffer for storing attributes before the dataset exists. | |

| HDFType | _type |

| Type of the data the dataset holds. | |

| HDFDataspace | _filespace |

| File dataspace identifier. | |

| HDFDataspace | _memspace |

| Memory dataspace identifier. | |

Additional Inherited Members

Static Public Attributes inherited from Utopia::DataIO::HDFObject< HDFCategory::dataset >

| static constexpr HDFCategory | category |

| Named variable for template arg. | |

Protected Attributes inherited from Utopia::DataIO::HDFObject< HDFCategory::dataset >

| HDFIdentifier | _id |

| Identifier object that binds an instance of this class to an HDF5 object. | |

| std::string | _path |

| Name of the object. | |

| std::shared_ptr< spdlog::logger > | _log |

| pointer to the logger for dataio | |

Detailed Description

Class representing an HDFDataset, which reads and writes data and attributes.

- Template Parameters

  - HDFObject: The type of the parent object.

Member Typedef Documentation

◆ Base

Base class alias.

Constructor & Destructor Documentation

◆ HDFDataset() [1/4]

[default]

Default constructor.

◆ HDFDataset() [2/4]

[default]

Copy constructor.

- Parameters

  - other: The HDFDataset to copy from.

◆ HDFDataset() [3/4]

[default]

Move constructor.

- Parameters

  - other: The HDFDataset to move from.

◆ HDFDataset() [4/4]

[inline]

Construct a new HDFDataset object.

- Parameters

  - parent_object: The HDFGroup/HDFFile in which the dataset shall be created.
  - path: The path of the dataset in parent_object.
  - capacity: The maximum size of the dataset in each dimension. Give H5S_UNLIMITED if unlimited size is desired; chunksizes must then be given as well.
  - chunksizes: The chunk sizes to use in each dimension.
  - compress_level: The compression level to use.
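The rule that an unlimited capacity requires chunk sizes can be checked before anything is handed to HDF5. The following is a minimal sketch under stated assumptions, not Utopia's implementation: `check_layout` is a hypothetical helper, `hsize` stands in for `hsize_t`, and `kUnlimited` stands in for `H5S_UNLIMITED`, which HDF5 defines as the largest `hsize_t` value. The returned rank mirrors set_capacity(), which sets the rank to capacity.size(); the one-chunk-size-per-dimension check reflects HDF5's requirement that chunk dimensions match the dataset rank.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins so the sketch compiles without HDF5:
// hsize mirrors hsize_t, kUnlimited mirrors H5S_UNLIMITED.
using hsize = std::uint64_t;
constexpr hsize kUnlimited = std::numeric_limits<hsize>::max();

// Hypothetical helper: validates a capacity/chunksizes pair and returns the
// rank implied by the capacity vector (one entry per dimension).
std::size_t check_layout(const std::vector<hsize>& capacity,
                         const std::vector<hsize>& chunksizes) {
    bool unlimited = false;
    for (hsize c : capacity) {
        if (c == kUnlimited) {
            unlimited = true;
        }
    }
    // An unlimited (extendible) dimension needs a chunked layout.
    if (unlimited && chunksizes.empty()) {
        throw std::invalid_argument("unlimited capacity requires chunksizes");
    }
    // If chunk sizes are given, there must be exactly one per dimension.
    if (!chunksizes.empty() && chunksizes.size() != capacity.size()) {
        throw std::invalid_argument("chunksizes must match the rank");
    }
    return capacity.size();
}
```

With these definitions, `check_layout({kUnlimited, 3}, {100, 3})` returns 2 for an extendible 2d layout, whereas `check_layout({kUnlimited}, {})` throws `std::invalid_argument`.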

◆ ~HDFDataset()

[inline, virtual]

Destructor.

Member Function Documentation

◆ __create_dataset__()

[inline, private]

Helper function for creating a non-compressed dataset.

- Parameters

  - typesize: Size of the C type to write, in bytes.

- Template Parameters

  - Datatype: The data type stored in this dataset.

- Returns

- Nothing (void); the dataset is created as a side effect.

◆ __read_container__()

[inline, private]

Read a container.

◆ __read_pointertype__()

[inline, private]

Read pointer types.

The shape is either given by the user or assumed to be 1d, in which case N-d data is flattened.
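Flattening N-d data into a 1d buffer relies on HDF5's row-major (C) element order, in which the last index varies fastest. The sketch below uses hypothetical helpers `flat_index` and `flat_size` to show where an N-d index ends up in such a buffer; it illustrates the ordering only and does not reproduce the private read code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: position of an N-d index in a flat, row-major
// (C-order) 1d buffer, which is the element order HDF5 uses.
std::size_t flat_index(const std::vector<std::size_t>& shape,
                       const std::vector<std::size_t>& index) {
    std::size_t pos = 0;
    for (std::size_t d = 0; d < shape.size(); ++d) {
        // Horner-style accumulation: the last dimension varies fastest.
        pos = pos * shape[d] + index[d];
    }
    return pos;
}

// Length of the flattened buffer: the product of all extents.
std::size_t flat_size(const std::vector<std::size_t>& shape) {
    std::size_t n = 1;
    for (std::size_t s : shape) {
        n *= s;
    }
    return n;
}
```

For a dataset of shape {2, 3, 4}, the flat buffer holds 24 elements and the element at index {1, 2, 3} sits at position 23.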

◆ __read_scalartype__()

[inline, private]

Read scalar types (trivial case).

◆ __read_stringtype__()

[inline, private]

Read data which contains a single string.

The data is always read into a std::string, so 'resize' can be used on the buffer.

◆ __write_attribute_buffer__()

[inline, private]

Write out the attribute buffer.

◆ __write_container__()

[inline, private]

Writes containers to the dataset.

- Template Parameters

  - T: Automatically determined.

- Parameters

  - data: Data to write; can contain almost anything, including other containers.

- Returns

- herr_t status variable indicating if write was successful or not

◆ __write_pointertype__()

[inline, private]

Writes pointer types; the shape is interpreted like NumPy's shape argument.

- Template Parameters

  - T: Automatically determined.

- Parameters

  - data: Data to write; may contain only plain old data.

- Returns

- herr_t status variable indicating if write was successful or not

◆ __write_scalartype__()

[inline, private]

Writes simple scalars, which are not pointers, containers or strings.

- Template Parameters

  - T: Automatically determined.

- Parameters

  - data: Data to write.

- Returns

- herr_t status telling whether the write was successful; < 0 if not.

◆ __write_stringtype__()

[inline, private]

Writes string types.

- Parameters

  - data: Data to write, a (const) char* or std::string.

- Returns

- herr_t status variable indicating if write was successful or not

◆ add_attribute()

[inline]

Add an attribute to the dataset.

If the dataset is not yet open, the attribute is stored in _attribute_buffer and written on close.

- Note

- Attributes stored while the dataset was not yet open only become available after the dataset has been closed.

- Parameters

  - attribute_path: The attribute path.
  - data: The attribute data.

- Template Parameters

  - Attrdata: The type of the attribute data.

◆ close()

[inline]

Close the dataset.

This function is called by the destructor. It also writes out the attribute buffer, ensuring that a correctly closed dataset contains all specified attributes.
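The interplay between add_attribute() and close() amounts to a buffer that is flushed once the dataset exists. The class below is a hypothetical, HDF5-free sketch of that pattern: `BufferedDataset`, `record_write` and the `written` log are illustrative names, and the three-alternative `Variant` merely stands in for `HDFType::Variant`. It assumes, as the note on add_attribute() suggests, that buffered attributes are written when an opened dataset is closed.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <variant>
#include <vector>

// Hypothetical sketch of the attribute-buffering pattern (not Utopia code).
// Variant stands in for HDFType::Variant; record_write() stands in for the
// actual HDF5 attribute write and only records the attribute path.
class BufferedDataset {
public:
    using Variant = std::variant<int, double, std::string>;

    void open() { _open = true; }

    void add_attribute(std::string path, Variant data) {
        if (_open) {
            record_write(path, data);  // dataset exists: write right away
        } else {
            // dataset does not exist yet: keep the attribute for later
            _attribute_buffer.emplace_back(std::move(path), std::move(data));
        }
    }

    void close() {
        if (_open) {
            // flush buffered attributes before closing
            for (const auto& [path, data] : _attribute_buffer) {
                record_write(path, data);
            }
            _attribute_buffer.clear();
        }
        _open = false;
    }

    ~BufferedDataset() { close(); }  // closing also happens on destruction

    std::vector<std::string> written;  // paths "written" so far, for inspection

private:
    void record_write(const std::string& path, const Variant&) {
        written.push_back(path);
    }

    bool _open = false;
    std::vector<std::pair<std::string, Variant>> _attribute_buffer;
};
```

Adding an attribute before open() leaves `written` empty; it only appears there after close(), while attributes added after open() are recorded immediately.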

◆ get_attribute_buffer()

[inline]

Returns the attribute buffer of this dataset.

◆ get_capacity()

[inline]

Get the maximum extent (capacity) of the dataset.

- Returns

- std::vector<hsize_t>

◆ get_chunksizes()

[inline]

Get the chunksizes vector.

- Returns

- auto

◆ get_compresslevel()

[inline]

Get the compression level.

- Returns

- auto

◆ get_current_extent()

[inline]

Get the current extent of the dataset.

- Returns

- std::vector<hsize_t>

◆ get_filespace()

[inline]

Get the file dataspace.

- Returns

- HDFDataspace

◆ get_memspace()

[inline]

Get the memory dataspace.

- Returns

- HDFDataspace

◆ get_offset()

[inline]

Get the offset object.

- Returns

- std::vector<hsize_t>

◆ get_parent_id()

[inline]

Get the identifier of the parent object.

- Returns

- HDFIdentifier

◆ get_rank()

[inline]

Get the rank of the dataset, i.e. its dimensionality.

- Returns

- std::size_t

◆ get_type()

[inline]

Get the type object.

- Returns

- hid_t

◆ open() [1/2]

[inline]

Open the dataset in the object identified by parent_identifier, with relative path 'path'.

- Parameters

  - parent_identifier: The HDFIdentifier of the object in which the dataset shall be created.
  - path: The path of the dataset in the parent object.
  - capacity: The maximum size of the dataset in each dimension. Give H5S_UNLIMITED if unlimited size is desired; chunksizes must then be given as well.
  - chunksizes: The chunk sizes to use in each dimension.
  - compress_level: The compression level to use, 0 to 10 (0 = no compression, 10 = highest compression).

◆ open() [2/2]

[inline]

Open the dataset in parent_object with relative path 'path'.

- Parameters

  - parent_object: The HDFGroup/HDFFile in which the dataset shall be created.
  - path: The path of the dataset in parent_object.
  - capacity: The maximum size of the dataset in each dimension. Give H5S_UNLIMITED if unlimited size is desired; chunksizes must then be given as well.
  - chunksizes: The chunk sizes to use in each dimension.
  - compress_level: The compression level to use, 0 to 10 (0 = no compression, 10 = highest compression).

◆ operator=() [1/2]

[default]

◆ operator=() [2/2]

[default]

◆ read()

[inline]

Read (a subset of) a dataset into a buffer of type 'Type'. Type gives the type of the buffer to read into. Currently only 1d reads are supported, so an N-d dataset of doubles has to be read into a 1d buffer of doubles whose size is the product of the dataset's dimensions.

- Template Parameters

  - Type: Type to read into.

- Parameters

  - start: Offset to start reading from (inclusive).
  - end: Where to stop reading (exclusive).
  - stride: Stride to use when reading, analogous to the step of a NumPy slice.

- Returns

- auto Buffer of type 'Type' containing read elements
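To size the 1d buffer for read(), count how many indices each dimension selects and multiply the counts. The sketch assumes that start, end and stride follow the semantics of a Python or NumPy slice (start inclusive, end exclusive, stride as step), as the parameter description suggests; `selection_counts`, `buffer_size` and `hsize` (a stand-in for `hsize_t`) are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

using hsize = std::uint64_t;  // hypothetical stand-in for hsize_t

// Hypothetical helper: number of elements selected per dimension by a
// [start, end) range with the given stride, like range(start, end, stride).
std::vector<hsize> selection_counts(const std::vector<hsize>& start,
                                    const std::vector<hsize>& end,
                                    const std::vector<hsize>& stride) {
    if (start.size() != end.size() || start.size() != stride.size()) {
        throw std::invalid_argument("start, end and stride need equal rank");
    }
    std::vector<hsize> count(start.size());
    for (std::size_t d = 0; d < start.size(); ++d) {
        if (stride[d] == 0 || end[d] < start[d]) {
            throw std::invalid_argument("invalid selection");
        }
        // ceiling division: a partial last step still selects one element
        count[d] = (end[d] - start[d] + stride[d] - 1) / stride[d];
    }
    return count;
}

// Size of the flat 1d buffer for the selection: the product of the counts.
hsize buffer_size(const std::vector<hsize>& count) {
    hsize n = 1;
    for (hsize c : count) {
        n *= c;
    }
    return n;
}
```

Selecting rows 0 to 9 with stride 3 and columns 1 to 3 (start {0, 1}, end {10, 4}, stride {3, 1}) yields counts {4, 3}, so the 1d buffer needs 4 * 3 = 12 entries.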

◆ set_capacity()

[inline]

Set the capacity, and set the rank of the dataset to capacity.size().

- Parameters

  - capacity: The maximum size of the dataset in each dimension.

◆ set_chunksize()

[inline]

Set the chunk sizes.

- Parameters

  - chunksizes: The chunk sizes in each dimension.

◆ swap()

[inline]

Swap the state of the objects.

- Parameters

  - other: The HDFDataset to swap state with.
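Swapping state is commonly implemented member-wise with the `using std::swap;` idiom, so that argument-dependent lookup can pick up specialised overloads for the members. The class below is a generic, hypothetical illustration with two members that mimic `_path` and `_current_extent`; it is not the HDFDataset implementation.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical class illustrating the member-wise swap idiom.
class DatasetState {
public:
    DatasetState(std::string path, std::vector<unsigned long long> extent)
        : _path(std::move(path)), _current_extent(std::move(extent)) {}

    void swap(DatasetState& other) noexcept {
        using std::swap;  // enable ADL, fall back to std::swap
        swap(_path, other._path);
        swap(_current_extent, other._current_extent);
    }

    const std::string& path() const { return _path; }
    const std::vector<unsigned long long>& extent() const { return _current_extent; }

private:
    std::string _path;
    std::vector<unsigned long long> _current_extent;
};

// Non-member overload so that `using std::swap; swap(a, b);` finds it.
inline void swap(DatasetState& a, DatasetState& b) noexcept { a.swap(b); }
```

The non-member `swap` forwarding to the member lets generic code call `swap(a, b)` after `using std::swap;`.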

◆ write() [1/2]

[inline]

Write the iterator range [begin, end), following the usual STL convention.

- Template Parameters

  - Iter: Automatically determined.
  - Adaptor: Automatically determined.

- Parameters

  - begin: Iterator to the start of the range to write.
  - end: Iterator one past the end of the range to write.
  - adaptor: Modifier function which takes a reference of type Iter::value_type and returns some arbitrary type, from which a buffer is built and then written to the dataset. The adaptor hence determines what is written to the dataset.
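The adaptor overload of write() builds a buffer by applying the adaptor to every element of [begin, end). A minimal sketch of that buffer-building step, with a hypothetical `make_buffer` helper and an example `Agent` type, is shown below; the HDF5 write itself is omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <type_traits>
#include <vector>

// Hypothetical helper: applies the adaptor to each element of [begin, end)
// and collects the results in a contiguous std::vector, i.e. the kind of
// buffer that would then be handed to the HDF5 write call.
template <typename Iter, typename Adaptor>
auto make_buffer(Iter begin, Iter end, Adaptor&& adaptor) {
    using Value = std::decay_t<decltype(adaptor(*begin))>;
    std::vector<Value> buffer;
    buffer.reserve(static_cast<std::size_t>(std::distance(begin, end)));
    std::transform(begin, end, std::back_inserter(buffer), adaptor);
    return buffer;
}

// Example element type: only one member is meant to end up in the dataset.
struct Agent {
    int id;
    double energy;
};
```

Passing `[](const Agent& a) { return a.energy; }` as the adaptor turns a range of agents into a `std::vector<double>` of their energies.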

◆ write() [2/2]

[inline]

Writes data of arbitrary type.

- Template Parameters

  - T: Automatically determined.

- Parameters

  - data: Data to write.
  - shape: Shape array; currently only useful if pointer data is given.

◆ write_nd()

[inline]

Write a boost::multi_array of arbitrary type and dimension to the dataset. The dataset needs to be of dimension N >= d, because the number of dimensions of a dataset cannot be changed after it has been created. In all other regards this behaves like the normal 'write' function that accepts a value.

- Warning

- When no custom offset vector is given and the dataset is reused for multiple writes, it is assumed that the size of the written data varies only in the first dimension. Envisage this as stacking rectangular blocks of varying height but equal width and depth. The reason is that it is rather difficult to determine the offset automatically such that the user can do arbitrary writes without overwriting existing data or storing data inefficiently.

- Template Parameters

  - T: Type held by the boost::multi_array, automatically determined from the argument.
  - d: Dimensionality of the boost::multi_array, automatically determined.

- Parameters

  - data: boost::multi_array to be written to the dataset.
  - offset: Optional custom offset, giving the position in the dataset at which the zeroth element of the newly written array is placed.
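The default offset policy from the warning above, stacking blocks along the first dimension, can be written down explicitly. This is a sketch under stated assumptions, not the write_nd implementation: `plan_append`, `AppendPlan`, `hsize` and `kUnlimited` are hypothetical names standing in for the real members and HDF5 types (`hsize_t`, `H5S_UNLIMITED`), and the block is assumed to have the same rank as the dataset.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <stdexcept>
#include <vector>

using hsize = std::uint64_t;                                     // stand-in for hsize_t
constexpr hsize kUnlimited = std::numeric_limits<hsize>::max();  // stand-in for H5S_UNLIMITED

struct AppendPlan {
    std::vector<hsize> offset;      // where block element (0, ..., 0) goes
    std::vector<hsize> new_extent;  // dataset extent after the write
};

// Hypothetical helper implementing the default policy from the warning:
// blocks are stacked along the first dimension only.
AppendPlan plan_append(const std::vector<hsize>& current_extent,
                       const std::vector<hsize>& block_shape,
                       const std::vector<hsize>& capacity) {
    const std::size_t rank = current_extent.size();
    if (rank == 0 || block_shape.size() != rank || capacity.size() != rank) {
        throw std::invalid_argument("rank mismatch");
    }
    AppendPlan plan{std::vector<hsize>(rank, 0), current_extent};
    plan.offset[0] = current_extent[0];  // start right after the last block
    plan.new_extent[0] = current_extent[0] + block_shape[0];
    for (std::size_t d = 1; d < rank; ++d) {
        plan.new_extent[d] = std::max(current_extent[d], block_shape[d]);
    }
    for (std::size_t d = 0; d < rank; ++d) {
        if (capacity[d] != kUnlimited && plan.new_extent[d] > capacity[d]) {
            throw std::length_error("write would exceed dataset capacity");
        }
    }
    return plan;
}
```

Writing a {2, 4, 5} block and then a {3, 4, 5} block into an initially empty dataset places the second block at offset {2, 0, 0} and grows the extent to {5, 4, 5}.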

Member Data Documentation

◆ _attribute_buffer

[private]

A buffer for storing attributes before the dataset exists.

A vector holding (potentially very large) variant values, in which attributes are stored before the dataset physically exists. In each pair, the string holds the attribute path and the variant holds the data.

◆ _capacity

[private]

The maximum number of elements which can be stored in the dataset, per dimension.

◆ _chunksizes

[private]

The chunk sizes per dimension, if the dataset is extendible or compressed.

◆ _compress_level

[private]

The level of compression, 0 to 10.

◆ _current_extent

[private]

The currently occupied size of the dataset, in number of elements per dimension.

◆ _filespace

[private]

File dataspace identifier.

◆ _memspace

[private]

Memory dataspace identifier.

◆ _new_extent

[private]

Buffer for extent updates.

◆ _offset

[private]

Offset of the data.

◆ _parent_identifier

[private]

Identifier of the parent object.

◆ _rank

[private]

Number of dimensions of the dataset.

◆ _type

[private]

Type of the data the dataset holds.

The documentation for this class was generated from the following file:

- include/utopia/data_io/hdfdataset.hh